Performance analysis through the agile lifecycle

Performance analysis throughout the agile lifecycle helps teams make smarter, evidence-based decisions

Performance analysis is an important part of knowing how well (or not) your project or service is doing, whatever stage of the agile lifecycle you’re at.

I’ve seen a lot of approaches to performance monitoring through Discovery, Alpha, Beta and Live: some work well and some don’t. Bad performance monitoring is bloated, rigid and ineffective. Good performance monitoring is self-service, focused on impact, and gives the person looking at the data only the amount and type of information they need.

There are lots of product goal frameworks out there. This post is about an approach that can flex with the agile lifecycle phases and that works when there isn’t much performance analysis resource available.

Performance analysis for established products

For me, performance analysis for a live product is about setting up a way of identifying when a product or service is working well and when it’s not. From there, you should expect to investigate further to understand the problem, come up with solutions and then see what effect they have.

You could think of it as a doctor regularly checking a patient’s vitals to get an idea of when and where a problem might be. From there, they might do more tests or suggest changes to the patient’s diet. Then, when they feel they have a good enough understanding, they recommend a treatment and monitor the results.

To work in this way, an organisation needs to understand which health checks matter most for its products or services. What are the signs that things are ticking over nicely or that something is going awry?

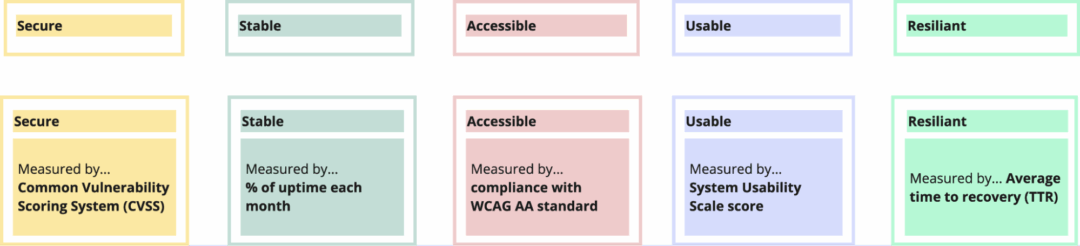

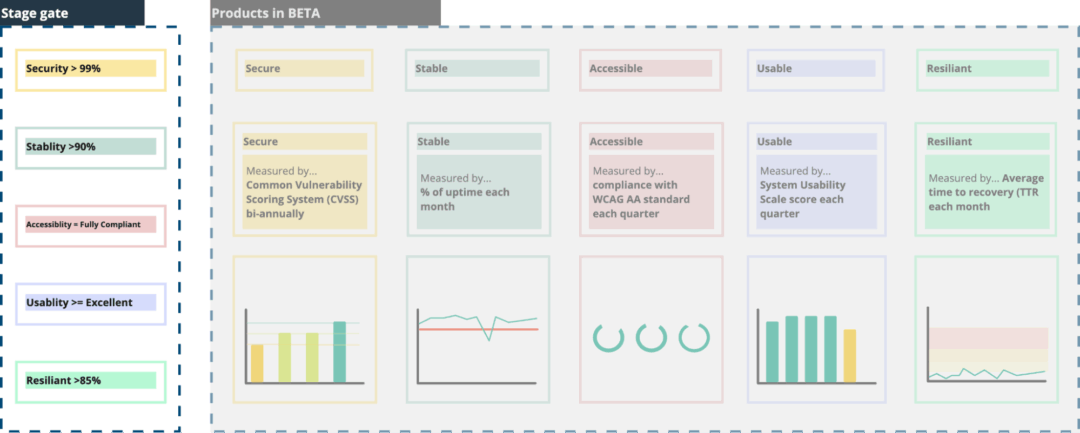

Here’s an example. An organisation has a digital product that manages applications. Its senior leadership identifies that, at a minimum, all digital products need to be: Secure, Stable, Accessible, Usable and Resilient. We’ll call these the ‘indicators’.

Working with performance analysts, they decide how these should be measured and reported on at a high level. We’ll call these the ‘metrics’.

For more critical indicators, you could decide to have multiple metrics to give you a sense of validity in the results.
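As a rough illustration, the indicator-to-metric mapping could be written down as simple data. This is only a sketch: the indicator names come from the example above, but the metric names, the `critical` flag and the `needs_second_metric` helper are hypothetical and would be agreed with leadership in practice.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Indicator:
    """A long-lived health check, measured by one or more metrics."""
    name: str
    critical: bool = False
    metrics: List[str] = field(default_factory=list)


# Indicator names come from the example in the post; the metric names and
# the choice of which indicators are critical are hypothetical.
INDICATORS = [
    Indicator("Secure", critical=True,
              metrics=["open_high_severity_vulnerabilities",
                       "days_since_last_pen_test"]),
    Indicator("Stable", critical=True, metrics=["uptime_pct"]),
    Indicator("Accessible", metrics=["automated_accessibility_failures"]),
    Indicator("Usable", metrics=["task_completion_rate"]),
    Indicator("Resilient", metrics=["minutes_to_recover"]),
]


def needs_second_metric(indicators: List[Indicator]) -> List[str]:
    """Return critical indicators that have fewer than two metrics."""
    return [i.name for i in indicators if i.critical and len(i.metrics) < 2]
```

Calling `needs_second_metric(INDICATORS)` on this made-up data flags any critical indicator that still depends on one number, which is a prompt for a conversation rather than a rule.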

The performance analysts then work with the leadership team to understand the most meaningful way of showing this information. This often involves conversations with other teams who might own that data. For example, technical operations teams will typically have data on uptime and security. Rather than duplicating this data, performance analysts should aim to reuse it.

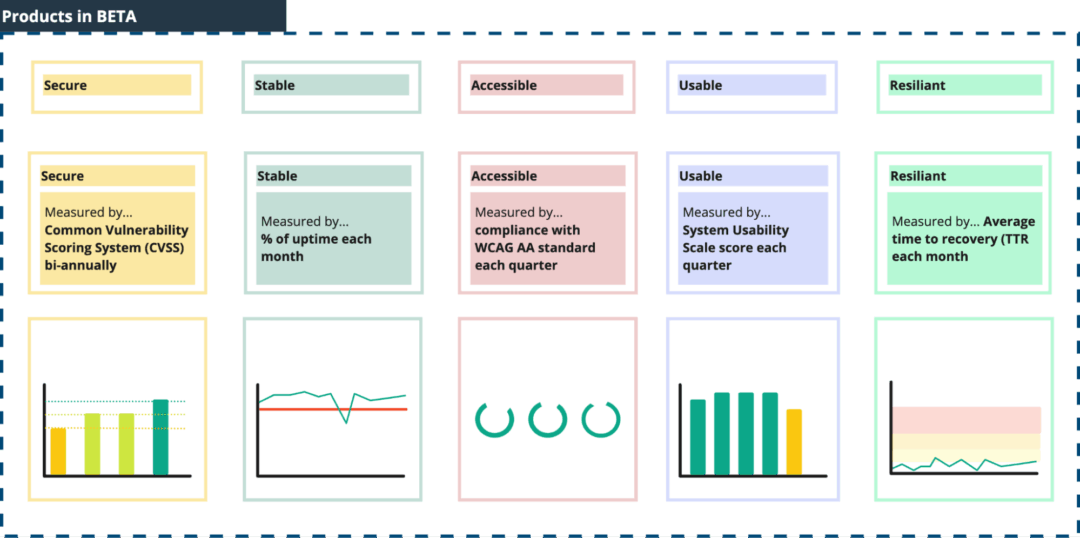

The examples above are only a starting point. More can be added, but it’s important to focus on what information is needed and by whom. Senior leadership should be interested in high-level indicators of how a product is doing, so they should be able to see at a glance if and when issues crop up.

Performance analysts can then build out other data visualisations for other members of the team, drilling down into more of the detail. For example, the dip in uptime (shown above) could be related to a spike in usage. A performance analyst could add a usage report to give context to the stability report.
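To make the idea of adding context concrete, here is a minimal sketch that flags days where uptime dipped and notes whether usage was unusually high that day. The field names (`day`, `uptime_pct`, `sessions`), the 99.5% target and the 1.5x spike factor are all assumptions for illustration, not recommended values.

```python
from statistics import median


def annotate_dips(daily, uptime_target=99.5, spike_factor=1.5):
    """For each day below the uptime target, note whether usage spiked.

    `daily` is a list of dicts with hypothetical keys:
    'day', 'uptime_pct' and 'sessions'.
    """
    typical_sessions = median(d["sessions"] for d in daily)
    notes = []
    for d in daily:
        if d["uptime_pct"] < uptime_target:
            spiked = d["sessions"] > spike_factor * typical_sessions
            notes.append((d["day"], "usage spike" if spiked else "no usage spike"))
    return notes


# Made-up sample data for one working week.
SAMPLE_WEEK = [
    {"day": "Mon", "uptime_pct": 99.9, "sessions": 100},
    {"day": "Tue", "uptime_pct": 99.8, "sessions": 110},
    {"day": "Wed", "uptime_pct": 99.9, "sessions": 95},
    {"day": "Thu", "uptime_pct": 97.0, "sessions": 300},
    {"day": "Fri", "uptime_pct": 99.2, "sessions": 105},
]
```

A dip that lines up with a spike suggests looking at capacity, while a dip on a normal day points somewhere else. Either way it only tells you where to investigate next.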

This setup works well for established digital products. Now I’ll look at what role performance analysts can play in the development stage, starting with the transition from alpha to beta.

Performance analysis and the transition from alpha to beta

It’s often not clear what decides whether a product can move from an ‘Alpha’ state to a ‘Beta’ state. Sometimes it comes with the product moving from the product team who built it to a different team who will maintain and iterate it.

This process could be better formalised and supported by performance analysis. With an understanding of the priorities of an organisation, performance analysts could identify products ready to transition to a later development stage. Depending on the organisation’s appetite for risk, thresholds would be set for each indicator. For example, the thresholds for security and stability could be set high, while usability and accessibility could have lower thresholds, with the expectation that the team prioritises these once the transition has happened.
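A threshold check like this could be sketched in a few lines. Everything specific here is an assumption: I’ve imagined each indicator being normalised to a score between 0 and 1, and the threshold values (including the one for resilience, which the example above doesn’t cover) are invented to show the high/low pattern rather than to recommend numbers.

```python
# Hypothetical minimum scores (0 to 1) a product must reach to move to Beta.
# Security and stability are set high; usability and accessibility lower,
# on the expectation that the team prioritises them after the transition.
BETA_THRESHOLDS = {
    "secure": 0.95,
    "stable": 0.95,
    "accessible": 0.70,
    "usable": 0.70,
    "resilient": 0.80,  # assumed value; not specified in the post
}


def failing_indicators(scores, thresholds=BETA_THRESHOLDS):
    """Return indicators scoring below their threshold, sorted by name.

    An indicator with no score at all is treated as failing.
    """
    return sorted(
        name for name, minimum in thresholds.items()
        if scores.get(name, 0.0) < minimum
    )


def ready_for_beta(scores, thresholds=BETA_THRESHOLDS):
    """A product is ready when no indicator falls below its threshold."""
    return not failing_indicators(scores, thresholds)
```

Returning the list of failing indicators, rather than just a yes or no, gives the team something to act on: it shows what to prioritise before the transition.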

Performance analysis in early stage product development

Going back another step, as a product team is building out a product’s functionality, performance analysts can establish and set up ways of monitoring the key indicators. In its early stages, it’s expected that products won’t have high levels across the indicators but instead that they’ll improve over time. This in itself tells a good story of iterative improvement and prioritisation, showing agile principles in practice. For organisations that require service assessments, this can be good evidence to give to assessors.

Typically, these indicators would be separate from a product team’s day-to-day way of planning and delivering work. The latter might include backlog items, Objectives and Key Results (OKRs), or goals.

Indicators are things that are expected to be monitored long-term, with appropriate fluctuations. They are typically higher level and the responsibility of a central function like performance analysis. Backlog items, OKRs or goals are things that are expected to be shorter term and are either met or not met. Once they are met, they will no longer be monitored. These are typically more detailed and the responsibility of a product team.

Often teams don’t have an embedded performance analyst, but they should be able to engage a central performance analysis resource to help them.

To sum up, incorporating performance analysis throughout the agile lifecycle helps teams make smarter, evidence-based decisions at every stage of product development. Whether you’re just getting started in Discovery or maintaining a more mature product, having the right indicators, metrics and visualisations in place means you can spot issues early, track meaningful progress and focus efforts where they matter most.